This had me scratching my head for a while.

At first I thought that glGetRenderbufferParameterivOES would properly detect the Retina screen at 960×640, but it keeps returning 480×320.

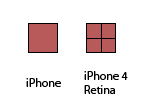

First, a little explanation of the Retina screen. Older devices have a 320×480 screen resolution. On the new iPhone 4 and iPod Touch 4G the screen is 640×960, but in the same physical area. This means each pixel is half as wide and half as tall, so four pixels now fit where one used to be.

To properly simulate older games' resolutions, iOS replaces each pixel of your 320×480 game with 4 pixels (a 2×2 block), so your game looks identical.
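To make the pixel doubling concrete, here is a minimal sketch in plain C (which also compiles inside an Objective-C file). It only illustrates the arithmetic and is not how iOS implements the upscaling; `DevicePixel` and `legacy_pixel_to_device_block` are hypothetical names made up for this example.

```c
#include <stddef.h>

/* A position on the physical 640x960 panel. */
typedef struct { int x; int y; } DevicePixel;

/* Fill out[0..3] with the 2x2 block of device pixels covered by one
   legacy 320x480 pixel at (x, y) when everything is scaled by 2. */
static void legacy_pixel_to_device_block(int x, int y, DevicePixel out[4])
{
    const int scale = 2;
    size_t i = 0;
    for (int dy = 0; dy < scale; ++dy) {
        for (int dx = 0; dx < scale; ++dx) {
            out[i].x = x * scale + dx;
            out[i].y = y * scale + dy;
            ++i;
        }
    }
}
```

For example, the last legacy pixel (319, 479) ends up covering device pixels (638, 958) through (639, 959), which is exactly the bottom-right corner of the 640×960 panel.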


The API handles the two cases differently. If you have developed for iPad, you will have noticed that glGetRenderbufferParameterivOES returns 1024×768 there, but on Retina displays it does not report the full resolution. Instead, you have to check for it yourself:

    int w = 320;
    int h = 480;

    float ver = [[[UIDevice currentDevice] systemVersion] floatValue];

    // You can't detect screen resolutions on pre-3.2 devices, but they are all 320x480
    if (ver >= 3.2f)
    {
        UIScreen* mainscr = [UIScreen mainScreen];
        w = mainscr.currentMode.size.width;
        h = mainscr.currentMode.size.height;
    }

    if (w == 640 && h == 960) // Retina display detected
    {
        // Set contentScaleFactor to 2
        self.contentScaleFactor = 2.0;

        // Also set our glLayer contentsScale to 2
        CAEAGLLayer *eaglLayer = (CAEAGLLayer *)self.layer;
        eaglLayer.contentsScale = 2; // new line
    }

Easy, right? Remember, you must do this before calling glGetRenderbufferParameterivOES.
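As a sanity check, you can compare what glGetRenderbufferParameterivOES reports against the size you expect once the scale factor is applied. The helper below is a rough sketch in plain C, assuming the view is 320×480 in UIKit units; `PixelSize` and `expected_backing_size` are hypothetical names, not part of any Apple API.

```c
/* Width and height of a renderbuffer in real pixels. */
typedef struct { int width; int height; } PixelSize;

/* Expected renderbuffer size for a view of the given size (in UIKit
   units) once the scale factor has been applied. Rounds to nearest. */
static PixelSize expected_backing_size(int viewWidth, int viewHeight, float scale)
{
    PixelSize s;
    s.width  = (int)(viewWidth  * scale + 0.5f);
    s.height = (int)(viewHeight * scale + 0.5f);
    return s;
}
```

With a scale of 2 a 320×480 view should give 640×960; with a scale of 1 it stays 320×480. If the values you read back do not match, the scale factor was probably set too late.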

For touch positions, just multiply each coordinate by the scale factor, since touches are still reported in the 320×480 coordinate space.
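The touch conversion can be sketched like this in plain C. `Vec2` and `touch_to_pixels` are hypothetical names for this example; in a real view you would feed in the x and y from the touch location and the same scale factor you set on the view.

```c
/* A 2D position, either in UIKit units or in real pixels. */
typedef struct { float x; float y; } Vec2;

/* Convert a touch position from the 320x480 coordinate space into
   framebuffer pixels by multiplying by the scale factor. */
static Vec2 touch_to_pixels(Vec2 touch, float scale)
{
    Vec2 p = { touch.x * scale, touch.y * scale };
    return p;
}
```

A touch in the middle of the screen at (160, 240) becomes (320, 480) in pixels on a Retina display, and is left unchanged when the scale factor is 1.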

I’ve only tested this on the simulator since I don’t own an iPhone 4, but it should work and detect whether you are on a regular or Retina screen.